Suppose you have a dataset with many columns (dimensions). In some scenarios it’s useful to compute an approximation of the original dataset that has fewer columns. This is known as dimensionality reduction.

The two most common techniques for data dimensionality reduction are using PCA (principal component analysis) and using a neural network autoencoder. I put together a neural autoencoder example using the C# language. An autoencoder is a specific kind of neural network — one that predicts its input.

The raw source data looks like:

F 24 michigan 29500.00 lib

M 39 oklahoma 51200.00 mod

F 63 nebraska 75800.00 con

M 36 michigan 44500.00 mod

F 27 nebraska 28600.00 lib

. . .

There are 240 data items. I split the data into a 200-item dataset for reduction and a 40-item dataset to use for validating the autoencoder.

To use a neural network autoencoder, raw data must be encoded (categorical data to numeric) and normalized (numeric data to roughly the same range, usually -1 to +1, or 0 to 1). The demo encoded and normalized data is:

1.0000 0.2400 1.0000 0.0000 0.0000 0.2950 0.0000 0.0000 1.0000

-1.0000 0.3900 0.0000 0.0000 1.0000 0.5120 0.0000 1.0000 0.0000

1.0000 0.6300 0.0000 1.0000 0.0000 0.7580 1.0000 0.0000 0.0000

-1.0000 0.3600 1.0000 0.0000 0.0000 0.4450 0.0000 1.0000 0.0000

1.0000 0.2700 0.0000 1.0000 0.0000 0.2860 0.0000 0.0000 1.0000

. . .

Sex is encoded as M = -1 and F = 1. Age is normalized by dividing by 100. State is one-hot encoded as Michigan = 100, Nebraska = 010, Oklahoma = 001. Income is normalized by dividing by 100,000. Political leaning is one-hot encoded as conservative = 100, moderate = 010, liberal = 001.
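The encoding rules above can be sketched in a few lines of C#. This is a minimal illustration and not part of the demo program: the `PeopleEncoder` class and its `EncodeLine` method are hypothetical names, and the sketch assumes each raw line has exactly five space-delimited fields in the order sex, age, state, income, politics.

```csharp
using System;
using System.Globalization;

public static class PeopleEncoder
{
  // Hypothetical helper (not in the demo): converts one raw line such as
  // "F 24 michigan 29500.00 lib" into the 9 encoded and normalized values.
  public static double[] EncodeLine(string line)
  {
    string[] t = line.Split(new char[] { ' ' },
      StringSplitOptions.RemoveEmptyEntries);
    if (t.Length != 5)
      throw new ArgumentException("Expected 5 fields: " + line);

    double[] result = new double[9]; // all cells start at 0.0

    // sex: M = -1, F = +1
    if (t[0] == "M") result[0] = -1.0;
    else if (t[0] == "F") result[0] = 1.0;
    else throw new ArgumentException("Unknown sex: " + t[0]);

    // age is divided by 100
    result[1] = double.Parse(t[1], CultureInfo.InvariantCulture) / 100.0;

    // state one-hot in cells [2..4]: michigan, nebraska, oklahoma
    string[] states = new[] { "michigan", "nebraska", "oklahoma" };
    result[2 + IndexOfLabel(states, t[2])] = 1.0;

    // income is divided by 100,000
    result[5] = double.Parse(t[3], CultureInfo.InvariantCulture) / 100000.0;

    // politics one-hot in cells [6..8]: con, mod, lib
    string[] politics = new[] { "con", "mod", "lib" };
    result[6 + IndexOfLabel(politics, t[4])] = 1.0;

    return result;
  }

  // Returns the position of value in labels, or throws if it is missing.
  private static int IndexOfLabel(string[] labels, string value)
  {
    int idx = Array.IndexOf(labels, value);
    if (idx < 0)
      throw new ArgumentException("Unknown label: " + value);
    return idx;
  }
}
```

Calling `PeopleEncoder.EncodeLine("F 24 michigan 29500.00 lib")` gives the values shown in the first encoded row above. For a dataset with more columns, a table-driven encoder would probably be easier to maintain than hard-coded cell offsets.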

After reduction using a 9-6-9 autoencoder, the 9 columns of the source numeric data are reduced to 6 columns:

0.0102 0.2991 -0.0517 0.0154 -0.8028 0.9672

-0.2268 0.8857 0.0029 -0.2421 0.7477 -0.9319

0.0697 -0.9168 0.2438 0.9212 0.4091 0.2533

-0.0505 0.2831 0.5931 -0.9208 0.6399 -0.2666

0.5075 0.1818 0.0889 0.9078 -0.8808 0.3985

. . .

Compared to dimensionality reduction using PCA, neural autoencoder reduction has the big advantage that it works with source data that has mixed categorical and numeric columns, whereas PCA works only with strictly numeric source data.

The reduced data can be used as an approximation for the source raw data. Common use-case examples include data visualization in a 2D graph (if the source data is reduced to 2 columns that represent the x and y coordinates), using machine learning and classical statistics techniques that only work with numeric columns, using techniques that only work well with a small number of columns, data cleaning (because the reduced data removes noise from the source data), and several advanced anomaly detection techniques that require strictly numeric data.

The demo program begins by loading the 240-item raw source data and displaying the first 5 lines:

// 1. load data

string rawFile = "..\\..\\..\\Data\\people_raw.txt";

string[] rawData = Utils.FileLoad(rawFile, "#");

Console.WriteLine("First 5 raw data: ");

for (int i = 0; i < 5; ++i)

Console.WriteLine(rawData[i]);

Next, the 200-item normalized and encoded data and the 40-item validation data are loaded into memory, and the first five data items are displayed:

Console.WriteLine("Loading encoded" +

" and normalized data ");

string dataFile = "..\\..\\..\\Data\\people_data.txt";

double[][] dataX = Utils.MatLoad(dataFile,

new int[] { 0, 1, 2, 3, 4, 5, 6, 7, 8 }, ',', "#");

string validationFile =

"..\\..\\..\\Data\\people_validation.txt";

double[][] validationX = Utils.MatLoad(validationFile,

new int[] { 0, 1, 2, 3, 4, 5, 6, 7, 8 }, ',', "#");

Console.WriteLine("Done ");

Console.WriteLine("First 5 data items: ");

Utils.MatShow(dataX, 4, 9, 5);

The neural autoencoder is instantiated and trained using these statements:

// 2. create and train NN autoencoder

Console.WriteLine("Creating 9-6-9 tanh-tanh" +

" autoencoder ");

NeuralNet nn = new NeuralNet(9, 6, 9, seed: 0);

Console.WriteLine("Done ");

Console.WriteLine("Preparing train parameters ");

int maxEpochs = 1000;

double lrnRate = 0.01;

int batSize = 10;

Console.WriteLine("maxEpochs = " +

maxEpochs);

Console.WriteLine("lrnRate = " +

lrnRate.ToString("F3"));

Console.WriteLine("batSize = " + batSize);

Console.WriteLine("Starting training ");

nn.Train(dataX, dataX, lrnRate, batSize, maxEpochs);

Console.WriteLine("Done ");

The number of input nodes and output nodes (9) is determined by the source normalized and encoded data. The number of hidden nodes (6) is usually about 60% to 80% of the number of input/output nodes. If the number of hidden nodes is too small, the autoencoder won’t approximate the original data well. If the number of hidden nodes is too large, the autoencoder will overfit the data, and any new data that arrives will not be encoded well.

The number of epochs to train, the learning rate, and the batch size are hyperparameters that must be determined by trial and error. This is the biggest weakness of autoencoder dimensionality reduction. The training process displays progress:

maxEpochs = 1000

lrnRate = 0.010

batSize = 10

Starting training

epoch:    0 MSE = 2.3826

epoch:  100 MSE = 0.0273

epoch:  200 MSE = 0.0068

epoch:  300 MSE = 0.0043

epoch:  400 MSE = 0.0032

epoch:  500 MSE = 0.0026

epoch:  600 MSE = 0.0023

epoch:  700 MSE = 0.0021

epoch:  800 MSE = 0.0019

epoch:  900 MSE = 0.0018

Done

The MSE is the mean squared error. It’s not necessarily a good idea to try to drive MSE to 0, because the autoencoder will overfit the data.
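To make the MSE numbers concrete, here is a small standalone sketch of the arithmetic. It mirrors what the demo's Error() method in the listing does: squared differences are summed over every output value, and the total is divided by the number of data items (not by items times columns). The `MseSketch` class and `Compute` method names are hypothetical and exist only for illustration.

```csharp
using System;

public static class MseSketch
{
  // Sums squared differences over all output values, then divides
  // by the number of rows, the same arithmetic as the demo's Error().
  public static double Compute(double[][] predicted, double[][] actual)
  {
    int n = predicted.Length;
    double sumSquaredError = 0.0;
    for (int i = 0; i < n; ++i)
    {
      for (int k = 0; k < predicted[i].Length; ++k)
      {
        double diff = predicted[i][k] - actual[i][k];
        sumSquaredError += diff * diff;
      }
    }
    return sumSquaredError / n;
  }
}
```

For example, with predicted rows (0.5, 0.0) and (1.0, 1.0) and actual rows (0.0, 0.0) and (1.0, 0.0), the squared errors sum to 0.25 + 1.00 = 1.25, and dividing by the 2 rows gives 0.625. For an autoencoder, the actual values are simply the inputs themselves.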

The demo computes the MSE on the 40-item validation data:

MSE on validation data = 0.0017

The idea here is that MSE on validation data (0.0017) should be roughly comparable to the MSE on the source data (0.0018). In this demo, the two MSE values are extremely close, which is a bit unusual.

The demo concludes by applying the autoencoder to the source data to compute the reduced form, which is read from the 6 hidden nodes of the autoencoder. The demo simply displays the reduced data. In a non-demo scenario, the reduced data would likely be saved to a text file.
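Saving the reduced data could look something like the sketch below. This is an assumption about one reasonable approach, not code from the demo: the `ReducedDataWriter` class and `MatSave` method are hypothetical names. Values are written comma-delimited, one row per line, so a file like this could be read back with the demo's Utils.MatLoad() using ',' as the separator.

```csharp
using System;
using System.Globalization;
using System.IO;

public static class ReducedDataWriter
{
  // Hypothetical helper: writes each row of m as comma-separated
  // values with dec decimal places, one row per line.
  public static void MatSave(double[][] m, string fn, int dec)
  {
    using (StreamWriter sw = new StreamWriter(fn))
    {
      foreach (double[] row in m)
      {
        string[] cells = new string[row.Length];
        for (int j = 0; j < row.Length; ++j)
        {
          // invariant culture so the decimal separator is always '.'
          cells[j] = row[j].ToString("F" + dec,
            CultureInfo.InvariantCulture);
        }
        sw.WriteLine(string.Join(",", cells));
      }
    } // the using block closes the file even if an exception occurs
  }
}
```

In the demo, a call such as `ReducedDataWriter.MatSave(reduced, "people_reduced.txt", 4)` would go right after the call to ReduceMatrix(); the file name here is made up for the example.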

Good fun!

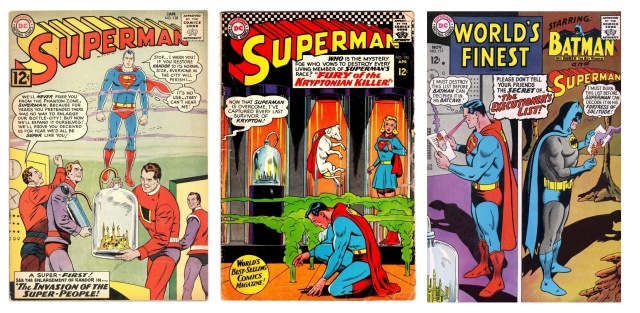

The bottle city of Kandor is an example of extreme dimensionality reduction. Kandor was a city on Superman’s home planet of Krypton. It was shrunk, stolen, and put into a bottle by the evil Brainiac some years before Krypton exploded. Superman eventually recovered the bottle but was unable to restore the city and its one million Kryptonians. The city of Kandor first appeared in a 1958 issue of Superman and then made regular appearances for the next 20 years.

Left: “The Invasion of the Super-People” from Superman #158, January 1963. Cover art by Curt Swan, my favorite comic book artist.

Center: “The Fury of the Kryptonian-Killer!”, Superman #195, April 1967. Cover art by Curt Swan.

Right: “The Executioner’s List!”, World’s Finest #171, November 1967. Cover art by Curt Swan.

Demo code. Replace “lt” (less than), “gt”, “lte”, “gte” with Boolean operator symbols (my blog editor often chokes on symbols).

using System;

using System.IO;

using System.Collections.Generic;

namespace NeuralNetworkDimReduction

{

internal class NeuralDimReductionProgram

{

static void Main(string[] args)

{

Console.WriteLine("\nBegin neural dim reduction demo ");

// 1. load data

string rawFile = "..\\..\\..\\Data\\people_raw.txt";

string[] rawData = Utils.FileLoad(rawFile, "#");

Console.WriteLine("\nFirst 5 raw data: ");

for (int i = 0; i "lt" 5; ++i)

Console.WriteLine(rawData[i]);

Console.WriteLine("\nLoading encoded" +

" and normalized data ");

string dataFile = "..\\..\\..\\Data\\people_data.txt";

double[][] dataX = Utils.MatLoad(dataFile,

new int[] { 0, 1, 2, 3, 4, 5, 6, 7, 8 }, ',', "#");

string validationFile =

"..\\..\\..\\Data\\people_validation.txt";

double[][] validationX = Utils.MatLoad(validationFile,

new int[] { 0, 1, 2, 3, 4, 5, 6, 7, 8 }, ',', "#");

Console.WriteLine("Done ");

Console.WriteLine("\nFirst 5 data items: ");

Utils.MatShow(dataX, 4, 9, 5);

// 2. create and train NN autoencoder

Console.WriteLine("\nCreating 9-6-9 tanh-tanh" +

" autoencoder ");

NeuralNet nn = new NeuralNet(9, 6, 9, seed: 0);

Console.WriteLine("Done ");

Console.WriteLine("\nPreparing train parameters ");

int maxEpochs = 1000;

double lrnRate = 0.01;

int batSize = 10;

Console.WriteLine("\nmaxEpochs = " +

maxEpochs);

Console.WriteLine("lrnRate = " +

lrnRate.ToString("F3"));

Console.WriteLine("batSize = " + batSize);

Console.WriteLine("\nStarting training ");

nn.Train(dataX, dataX, lrnRate, batSize, maxEpochs);

Console.WriteLine("Done ");

// 3. sanity check for overfitting

double validationErr = nn.Error(validationX,

validationX);

Console.WriteLine("\nMSE on validation data = " +

validationErr.ToString("F4"));

// 4. use autoencoder

Console.WriteLine("\nReducing data ");

double[][] reduced = nn.ReduceMatrix(dataX);

Console.WriteLine("\nFirst 5 reduced: ");

Utils.MatShow(reduced, 4, 9, 5);

Console.WriteLine("\nEnd demo ");

Console.ReadLine();

} // Main()

} // Program

// =========================================================

public class NeuralNet

{

private int ni; // number input nodes

private int nh;

private int no;

private double[] iNodes;

private double[][] ihWeights; // input-hidden

private double[] hBiases;

private double[] hNodes;

private double[][] hoWeights; // hidden-output

private double[] oBiases;

private double[] oNodes;

// gradients

private double[][] ihGrads;

private double[] hbGrads;

private double[][] hoGrads;

private double[] obGrads;

private Random rnd; // init wts and scramble train order

// ------------------------------------------------------

public NeuralNet(int numIn, int numHid,

int numOut, int seed)

{

this.ni = numIn;

this.nh = numHid;

this.no = numOut; // same as ni for autoencoder

this.iNodes = new double[numIn];

this.ihWeights = MatCreate(numIn, numHid);

this.hBiases = new double[numHid];

this.hNodes = new double[numHid];

this.hoWeights = MatCreate(numHid, numOut);

this.oBiases = new double[numOut];

this.oNodes = new double[numOut];

this.ihGrads = MatCreate(numIn, numHid);

this.hbGrads = new double[numHid];

this.hoGrads = MatCreate(numHid, numOut);

this.obGrads = new double[numOut];

this.rnd = new Random(seed);

this.InitWeights(); // all weights and biases

} // ctor

// ------------------------------------------------------

private void InitWeights() // helper for ctor

{

// weights and biases to small random values

double lo = -0.01; double hi = +0.01;

int numWts = (this.ni * this.nh) +

(this.nh * this.no) + this.nh + this.no;

double[] initialWeights = new double[numWts];

for (int i = 0; i "lt" initialWeights.Length; ++i)

initialWeights[i] =

(hi - lo) * rnd.NextDouble() + lo;

this.SetWeights(initialWeights);

}

// ------------------------------------------------------

public void SetWeights(double[] wts)

{

// copy serialized weights and biases in wts[]

// to ih weights, h biases, ho weights, o biases

int numWts = (this.ni * this.nh) +

(this.nh * this.no) + this.nh + this.no;

if (wts.Length != numWts)

throw new Exception("Bad array in SetWeights");

int k = 0; // points into wts param

for (int i = 0; i "lt" this.ni; ++i)

for (int j = 0; j "lt" this.nh; ++j)

this.ihWeights[i][j] = wts[k++];

for (int i = 0; i "lt" this.nh; ++i)

this.hBiases[i] = wts[k++];

for (int i = 0; i "lt" this.nh; ++i)

for (int j = 0; j "lt" this.no; ++j)

this.hoWeights[i][j] = wts[k++];

for (int i = 0; i "lt" this.no; ++i)

this.oBiases[i] = wts[k++];

}

// ------------------------------------------------------

public double[] GetWeights()

{

int numWts = (this.ni * this.nh) +

(this.nh * this.no) + this.nh + this.no;

double[] result = new double[numWts];

int k = 0;

for (int i = 0; i "lt" ihWeights.Length; ++i)

for (int j = 0; j "lt" this.ihWeights[0].Length; ++j)

result[k++] = this.ihWeights[i][j];

for (int i = 0; i "lt" this.hBiases.Length; ++i)

result[k++] = this.hBiases[i];

for (int i = 0; i "lt" this.hoWeights.Length; ++i)

for (int j = 0; j "lt" this.hoWeights[0].Length; ++j)

result[k++] = this.hoWeights[i][j];

for (int i = 0; i "lt" this.oBiases.Length; ++i)

result[k++] = this.oBiases[i];

return result;

}

// ------------------------------------------------------

public double[] ComputeOutput(double[] x)

{

double[] hSums = new double[this.nh]; // scratch

double[] oSums = new double[this.no]; // out sums

for (int i = 0; i "lt" x.Length; ++i)

this.iNodes[i] = x[i];

// note: usually no need to copy x-values unless

// you implement a ToString.

// more efficient to simply use the X[] directly.

// 1. compute i-h sum of weights * inputs

for (int j = 0; j "lt" this.nh; ++j)

for (int i = 0; i "lt" this.ni; ++i)

hSums[j] += this.iNodes[i] *

this.ihWeights[i][j]; // note +=

// 2. add biases to hidden sums

for (int i = 0; i "lt" this.nh; ++i)

hSums[i] += this.hBiases[i];

// 3. apply hidden activation

for (int i = 0; i "lt" this.nh; ++i)

this.hNodes[i] = HyperTan(hSums[i]);

// 4. compute h-o sum of wts * hOutputs

for (int j = 0; j "lt" this.no; ++j)

for (int i = 0; i "lt" this.nh; ++i)

oSums[j] += this.hNodes[i] *

this.hoWeights[i][j]; // [1]

// 5. add biases to output sums

for (int i = 0; i "lt" this.no; ++i)

oSums[i] += this.oBiases[i];

// 6. apply output activation

for (int i = 0; i "lt" this.no; ++i)

this.oNodes[i] = HyperTan(oSums[i]);

double[] result = new double[this.no];

for (int k = 0; k "lt" this.no; ++k)

result[k] = this.oNodes[k];

return result; // by-value avoid by-ref issues

}

// ------------------------------------------------------

private static double HyperTan(double x)

{

if (x "lt" -10.0) return -1.0;

else if (x "gt" 10.0) return 1.0;

else return Math.Tanh(x);

}

// ------------------------------------------------------

private static double LogisticSigmoid(double x)

{

if (x "lt" -10.0) return 0.0;

else if (x "gt" 10.0) return 1.0;

else return 1.0 / (1.0 + Math.Exp(-x));

}

// ------------------------------------------------------

private static double Identity(double x)

{

return x;

}

// ------------------------------------------------------

private void ZeroOutGrads()

{

for (int i = 0; i "lt" this.ni; ++i)

for (int j = 0; j "lt" this.nh; ++j)

this.ihGrads[i][j] = 0.0;

for (int j = 0; j "lt" this.nh; ++j)

this.hbGrads[j] = 0.0;

for (int j = 0; j "lt" this.nh; ++j)

for (int k = 0; k "lt" this.no; ++k)

this.hoGrads[j][k] = 0.0;

for (int k = 0; k "lt" this.no; ++k)

this.obGrads[k] = 0.0;

} // ZeroOutGrads()

// ------------------------------------------------------

private void AccumGrads(double[] y)

{

double[] oSignals = new double[this.no];

double[] hSignals = new double[this.nh];

// 1. compute output node scratch signals

for (int k = 0; k "lt" this.no; ++k)

{

//double derivative = 1.0; // Identity() activation

double derivative =

(1 - this.oNodes[k]) *

(1 + this.oNodes[k]); // tanh() activation

oSignals[k] = derivative *

(this.oNodes[k] - y[k]);

}

// 2. accum hidden-to-output gradients

for (int j = 0; j "lt" this.nh; ++j)

for (int k = 0; k "lt" this.no; ++k)

hoGrads[j][k] +=

oSignals[k] * this.hNodes[j];

// 3. accum output node bias gradients

for (int k = 0; k "lt" this.no; ++k)

obGrads[k] +=

oSignals[k] * 1.0; // 1.0 dummy

// 4. compute hidden node signals

for (int j = 0; j "lt" this.nh; ++j)

{

double sum = 0.0;

for (int k = 0; k "lt" this.no; ++k)

sum += oSignals[k] * this.hoWeights[j][k];

double derivative =

(1 - this.hNodes[j]) *

(1 + this.hNodes[j]); // assumes tanh

//double derivative =

// (this.hNodes[j]) *

// (1 - this.hNodes[j]); // assumes log-sigmoid

hSignals[j] = derivative * sum;

}

// 5. accum input-to-hidden gradients

for (int i = 0; i "lt" this.ni; ++i)

for (int j = 0; j "lt" this.nh; ++j)

this.ihGrads[i][j] +=

hSignals[j] * this.iNodes[i];

// 6. accum hidden node bias gradients

for (int j = 0; j "lt" this.nh; ++j)

this.hbGrads[j] +=

hSignals[j] * 1.0; // 1.0 dummy

} // AccumGrads

// ------------------------------------------------------

private void UpdateWeights(double lrnRate)

{

// assumes all gradients computed

// 1. update input-to-hidden weights

for (int i = 0; i "lt" this.ni; ++i)

{

for (int j = 0; j "lt" this.nh; ++j)

{

double delta = -1.0 * lrnRate *

this.ihGrads[i][j];

this.ihWeights[i][j] += delta;

}

}

// 2. update hidden node biases

for (int j = 0; j "lt" this.nh; ++j)

{

double delta = -1.0 * lrnRate *

this.hbGrads[j];

this.hBiases[j] += delta;

}

// 3. update hidden-to-output weights

for (int j = 0; j "lt" this.nh; ++j)

{

for (int k = 0; k "lt" this.no; ++k)

{

double delta = -1.0 * lrnRate *

this.hoGrads[j][k];

this.hoWeights[j][k] += delta;

}

}

// 4. update output node biases

for (int k = 0; k "lt" this.no; ++k)

{

double delta = -1.0 * lrnRate *

this.obGrads[k];

this.oBiases[k] += delta;

}

} // UpdateWeights()

// ------------------------------------------------------

public void Train(double[][] dataX, double[][] dataY,

double lrnRate, int batSize, int maxEpochs)

{

int n = dataX.Length; // 200 for the demo data

int batchesPerEpoch = n / batSize; // 20

int freq = maxEpochs / 10; // to show progress

int[] indices = new int[n];

for (int i = 0; i "lt" n; ++i)

indices[i] = i;

// ----------------------------------------------------

//

// if n = 200 and bs = 10

// batches per epoch = 200 / 10 = 20

// for epoch = 0; epoch "lt" maxEpochs; ++epoch

// shuffle indices

// for batch = 0; batch "lt" bpe; ++batch

// for item = 0; item "lt" bs; ++item

// compute output

// accum grads

// end-item

// update weights

// zero-out grads

// end-batches

// end-epochs

//

// ----------------------------------------------------

for (int epoch = 0; epoch "lt" maxEpochs; ++epoch)

{

Shuffle(indices);

int ptr = 0; // points into indices

for (int batIdx = 0; batIdx "lt" batchesPerEpoch;

++batIdx) // 0, 1, . . 19

{

for (int i = 0; i "lt" batSize; ++i) // 0 . . 9

{

int ii = indices[ptr++]; // next shuffled index

double[] x = dataX[ii];

double[] y = dataY[ii];

this.ComputeOutput(x); // into this.oNodes

this.AccumGrads(y);

}

this.UpdateWeights(lrnRate);

this.ZeroOutGrads(); // prep next batch

} // batches

if (epoch % freq == 0) // progress

{

double mse = this.Error(dataX, dataY);

string s1 = "epoch: " + epoch.ToString().

PadLeft(4);

string s2 = " MSE = " + mse.ToString("F4");

Console.WriteLine(s1 + s2);

}

} // epoch

} // Train

// ------------------------------------------------------

public double[] ReduceVector(double[] x)

{

double[] result = new double[this.nh];

this.ComputeOutput(x);

for (int j = 0; j "lt" this.nh; ++j)

result[j] = this.hNodes[j];

return result;

}

// -------------------------------------------------------

public double[][] ReduceMatrix(double[][] X)

{

int nRows = X.Length;

int nCols = this.nh;

double[][] result = MatCreate(nRows, nCols);

for (int i = 0; i "lt" nRows; ++i)

{

double[] x = X[i];

double[] reduced = this.ReduceVector(x);

for (int j = 0; j "lt" nCols; ++j)

result[i][j] = reduced[j];

}

return result;

}

// -------------------------------------------------------

private void Shuffle(int[] sequence)

{

for (int i = 0; i "lt" sequence.Length; ++i)

{

int r = this.rnd.Next(i, sequence.Length);

int tmp = sequence[r];

sequence[r] = sequence[i];

sequence[i] = tmp;

//sequence[i] = i; // for testing

}

} // Shuffle

// ------------------------------------------------------

public double Error(double[][] dataX,

double[][] dataY)

{

// MSE

int n = dataX.Length;

double sumSquaredError = 0.0;

for (int i = 0; i "lt" n; ++i)

{

double[] predY = this.ComputeOutput(dataX[i]);

double[] actualY = dataY[i];

for (int k = 0; k "lt" predY.Length; ++k)

{

sumSquaredError += (predY[k] - actualY[k]) *

(predY[k] - actualY[k]);

}

}

return sumSquaredError / n;

} // Error

// ------------------------------------------------------

public void SaveWeights(string fn)

{

FileStream ofs = new FileStream(fn, FileMode.Create);

StreamWriter sw = new StreamWriter(ofs);

double[] wts = this.GetWeights();

for (int i = 0; i "lt" wts.Length; ++i)

sw.WriteLine(wts[i].ToString("F8")); // one per line

sw.Close();

ofs.Close();

}

public void LoadWeights(string fn)

{

FileStream ifs = new FileStream(fn, FileMode.Open);

StreamReader sr = new StreamReader(ifs);

List"lt"double"gt" listWts = new List"lt"double"gt"();

string line = ""; // one wt per line

while ((line = sr.ReadLine()) != null)

{

// if (line.StartsWith(comment) == true)

// continue;

listWts.Add(double.Parse(line));

}

sr.Close();

ifs.Close();

double[] wts = listWts.ToArray();

this.SetWeights(wts);

}

private static double[][] MatCreate(int nRows, int nCols)

{

double[][] result = new double[nRows][];

for (int i = 0; i "lt" nRows; ++i)

result[i] = new double[nCols];

return result;

}

// ------------------------------------------------------

} // NeuralNet class

// =========================================================

// ---------------------------------------------------------

// helpers for Main()

// ---------------------------------------------------------

public class Utils

{

//public static double[][] VecToMat(double[] vec,

// int nRows, int nCols)

//{

// // vector to row vec/matrix

// double[][] result = new double[nRows][];

// for (int i = 0; i "lt" nRows; ++i)

// result[i] = new double[nCols];

// int k = 0;

// for (int i = 0; i "lt" nRows; ++i)

// for (int j = 0; j "lt" nCols; ++j)

// result[i][j] = vec[k++];

// return result;

//}

// ------------------------------------------------------

//public static double[][] MatCreate(int rows,

// int cols)

//{

// double[][] result = new double[rows][];

// for (int i = 0; i "lt" rows; ++i)

// result[i] = new double[cols];

// return result;

//}

// ------------------------------------------------------

public static string[] FileLoad(string fn,

string comment)

{

List"lt"string"gt" lst = new List"lt"string"gt"();

FileStream ifs = new FileStream(fn, FileMode.Open);

StreamReader sr = new StreamReader(ifs);

string line = "";

while ((line = sr.ReadLine()) != null)

{

if (line.StartsWith(comment)) continue;

line = line.Trim();

lst.Add(line);

}

sr.Close(); ifs.Close();

string[] result = lst.ToArray();

return result;

}

// ------------------------------------------------------

public static double[][] MatLoad(string fn,

int[] usecols, char sep, string comment)

{

List"lt"double[]"gt" result = new List"lt"double[]"gt"();

string line = "";

FileStream ifs = new FileStream(fn, FileMode.Open);

StreamReader sr = new StreamReader(ifs);

while ((line = sr.ReadLine()) != null)

{

if (line.StartsWith(comment) == true)

continue;

string[] tokens = line.Split(sep);

List"lt"double"gt" lst = new List"lt"double"gt"();

for (int j = 0; j "lt" usecols.Length; ++j)

lst.Add(double.Parse(tokens[usecols[j]]));

double[] row = lst.ToArray();

result.Add(row);

}

sr.Close(); ifs.Close(); // release the file handle

return result.ToArray();

}

// ------------------------------------------------------

//public static double[] MatToVec(double[][] m)

//{

// int rows = m.Length;

// int cols = m[0].Length;

// double[] result = new double[rows * cols];

// int k = 0;

// for (int i = 0; i "lt" rows; ++i)

// for (int j = 0; j "lt" cols; ++j)

// result[k++] = m[i][j];

// return result;

//}

// ------------------------------------------------------

public static void MatShow(double[][] m,

int dec, int wid, int nRows)

{

for (int i = 0; i "lt" nRows; ++i)

{

for (int j = 0; j "lt" m[0].Length; ++j)

{

double v = m[i][j];

if (Math.Abs(v) "lt" 1.0e-8) v = 0.0; // hack

Console.Write(v.ToString("F" +

dec).PadLeft(wid));

}

Console.WriteLine("");

}

if (nRows "lt" m.Length)

Console.WriteLine(". . .");

}

// ------------------------------------------------------

//public static void VecShow(int[] vec, int wid)

//{

// for (int i = 0; i "lt" vec.Length; ++i)

// Console.Write(vec[i].ToString().PadLeft(wid));

// Console.WriteLine("");

//}

// ------------------------------------------------------

//public static void VecShow(double[] vec,

// int dec, int wid, bool newLine)

//{

// for (int i = 0; i "lt" vec.Length; ++i)

// {

// double x = vec[i];

// if (Math.Abs(x) "lt" 1.0e-8) x = 0.0;

// Console.Write(x.ToString("F" +

// dec).PadLeft(wid));

// }

// if (newLine == true)

// Console.WriteLine("");

//}

} // Utils class

// =========================================================

} // ns

Raw data:

# people_raw.txt
# sex, age, state, income, politics
#
F 24 michigan 29500.00 lib
M 39 oklahoma 51200.00 mod
F 63 nebraska 75800.00 con
M 36 michigan 44500.00 mod
F 27 nebraska 28600.00 lib
F 50 nebraska 56500.00 mod
F 50 oklahoma 55000.00 mod
M 19 oklahoma 32700.00 con
F 22 nebraska 27700.00 mod
M 39 oklahoma 47100.00 lib
F 34 michigan 39400.00 mod
M 22 michigan 33500.00 con
F 35 oklahoma 35200.00 lib
M 33 nebraska 46400.00 mod
F 45 nebraska 54100.00 mod
F 42 nebraska 50700.00 mod
M 33 nebraska 46800.00 mod
F 25 oklahoma 30000.00 mod
M 31 nebraska 46400.00 con
F 27 michigan 32500.00 lib
F 48 michigan 54000.00 mod
M 64 nebraska 71300.00 lib
F 61 nebraska 72400.00 con
F 54 oklahoma 61000.00 con
F 29 michigan 36300.00 con
F 50 oklahoma 55000.00 mod
F 55 oklahoma 62500.00 con
F 40 michigan 52400.00 con
F 22 michigan 23600.00 lib
F 68 nebraska 78400.00 con
M 60 michigan 71700.00 lib
M 34 oklahoma 46500.00 mod
M 25 oklahoma 37100.00 con
M 31 nebraska 48900.00 mod
F 43 oklahoma 48000.00 mod
F 58 nebraska 65400.00 lib
M 55 nebraska 60700.00 lib
M 43 nebraska 51100.00 mod
M 43 oklahoma 53200.00 mod
M 21 michigan 37200.00 con
F 55 oklahoma 64600.00 con
F 64 nebraska 74800.00 con
M 41 michigan 58800.00 mod
F 64 oklahoma 72700.00 con
M 56 oklahoma 66600.00 lib
F 31 oklahoma 36000.00 mod
M 65 oklahoma 70100.00 lib
F 55 oklahoma 64300.00 con
M 25 michigan 40300.00 con
F 46 oklahoma 51000.00 mod
M 36 michigan 53500.00 con
F 52 nebraska 58100.00 mod
F 61 oklahoma 67900.00 con
F 57 oklahoma 65700.00 con
M 46 nebraska 52600.00 mod
M 62 michigan 66800.00 lib
F 55 oklahoma 62700.00 con
M 22 oklahoma 27700.00 mod
M 50 michigan 62900.00 con
M 32 nebraska 41800.00 mod
M 21 oklahoma 35600.00 con
F 44 nebraska 52000.00 mod
F 46 nebraska 51700.00 mod
F 62 nebraska 69700.00 con
F 57 nebraska 66400.00 con
M 67 oklahoma 75800.00 lib
F 29 michigan 34300.00 lib
F 53 michigan 60100.00 con
M 44 michigan 54800.00 mod
F 46 nebraska 52300.00 mod
M 20 nebraska 30100.00 mod
M 38 michigan 53500.00 mod
F 50 nebraska 58600.00 mod
F 33 nebraska 42500.00 mod
M 33 nebraska 39300.00 mod
F 26 nebraska 40400.00 con
F 58 michigan 70700.00 con
F 43 oklahoma 48000.00 mod
M 46 michigan 64400.00 con
F 60 michigan 71700.00 con
M 42 michigan 48900.00 mod
M 56 oklahoma 56400.00 lib
M 62 nebraska 66300.00 lib
M 50 michigan 64800.00 mod
F 47 oklahoma 52000.00 mod
M 67 nebraska 80400.00 lib
M 40 oklahoma 50400.00 mod
F 42 nebraska 48400.00 mod
F 64 michigan 72000.00 con
M 47 michigan 58700.00 lib
F 45 nebraska 52800.00 mod
M 25 oklahoma 40900.00 con
F 38 michigan 48400.00 con
F 55 oklahoma 60000.00 mod
M 44 michigan 60600.00 mod
F 33 michigan 41000.00 mod
F 34 oklahoma 39000.00 mod
F 27 nebraska 33700.00 lib
F 32 nebraska 40700.00 mod
F 42 oklahoma 47000.00 mod
M 24 oklahoma 40300.00 con
F 42 nebraska 50300.00 mod
F 25 oklahoma 28000.00 lib
F 51 nebraska 58000.00 mod
M 55 nebraska 63500.00 lib
F 44 michigan 47800.00 lib
M 18 michigan 39800.00 con
M 67 nebraska 71600.00 lib
F 45 oklahoma 50000.00 mod
F 48 michigan 55800.00 mod
M 25 nebraska 39000.00 mod
M 67 michigan 78300.00 mod
F 37 oklahoma 42000.00 mod
M 32 michigan 42700.00 mod
F 48 michigan 57000.00 mod
M 66 oklahoma 75000.00 lib
F 61 michigan 70000.00 con
M 58 oklahoma 68900.00 mod
F 19 michigan 24000.00 lib
F 38 oklahoma 43000.00 mod
M 27 michigan 36400.00 mod
F 42 michigan 48000.00 mod
F 60 michigan 71300.00 con
M 27 oklahoma 34800.00 con
F 29 nebraska 37100.00 con
M 43 michigan 56700.00 mod
F 48 michigan 56700.00 mod
F 27 oklahoma 29400.00 lib
M 44 michigan 55200.00 con
F 23 nebraska 26300.00 lib
M 36 nebraska 53000.00 lib
F 64 oklahoma 72500.00 con
F 29 oklahoma 30000.00 lib
M 33 michigan 49300.00 mod
M 66 nebraska 75000.00 lib
M 21 oklahoma 34300.00 con
F 27 michigan 32700.00 lib
F 29 michigan 31800.00 lib
M 31 michigan 48600.00 mod
F 36 oklahoma 41000.00 mod
F 49 nebraska 55700.00 mod
M 28 michigan 38400.00 con
M 43 oklahoma 56600.00 mod
M 46 nebraska 58800.00 mod
F 57 michigan 69800.00 con
M 52 oklahoma 59400.00 mod
M 31 oklahoma 43500.00 mod
M 55 michigan 62000.00 lib
F 50 michigan 56400.00 mod
F 48 nebraska 55900.00 mod
M 22 oklahoma 34500.00 con
F 59 oklahoma 66700.00 con
F 34 michigan 42800.00 lib
M 64 michigan 77200.00 lib
F 29 oklahoma 33500.00 lib
M 34 nebraska 43200.00 mod
M 61 michigan 75000.00 lib
F 64 oklahoma 71100.00 con
M 29 michigan 41300.00 con
F 63 nebraska 70600.00 con
M 29 nebraska 40000.00 con
M 51 michigan 62700.00 mod
M 24 oklahoma 37700.00 con
F 48 nebraska 57500.00 mod
F 18 michigan 27400.00 con
F 18 michigan 20300.00 lib
F 33 nebraska 38200.00 lib
M 20 oklahoma 34800.00 con
F 29 oklahoma 33000.00 lib
M 44 oklahoma 63000.00 con
M 65 oklahoma 81800.00 con
M 56 michigan 63700.00 lib
M 52 oklahoma 58400.00 mod
M 29 nebraska 48600.00 con
M 47 nebraska 58900.00 mod
F 68 michigan 72600.00 lib
F 31 oklahoma 36000.00 mod
F 61 nebraska 62500.00 lib
F 19 nebraska 21500.00 lib
F 38 oklahoma 43000.00 mod
M 26 michigan 42300.00 con
F 61 nebraska 67400.00 con
F 40 michigan 46500.00 mod
M 49 michigan 65200.00 mod
F 56 michigan 67500.00 con
M 48 nebraska 66000.00 mod
F 52 michigan 56300.00 lib
M 18 michigan 29800.00 con
M 56 oklahoma 59300.00 lib
M 52 nebraska 64400.00 mod
M 18 nebraska 28600.00 mod
M 58 michigan 66200.00 lib
M 39 nebraska 55100.00 mod
M 46 michigan 62900.00 mod
M 40 nebraska 46200.00 mod
M 60 michigan 72700.00 lib
F 36 nebraska 40700.00 lib
F 44 michigan 52300.00 mod
F 28 michigan 31300.00 lib
F 54 oklahoma 62600.00 con
M 51 michigan 61200.00 mod
M 32 nebraska 46100.00 mod
F 55 michigan 62700.00 con
F 25 oklahoma 26200.00 lib
F 33 oklahoma 37300.00 lib
M 29 nebraska 46200.00 con
F 65 michigan 72700.00 con
M 43 nebraska 51400.00 mod
M 54 nebraska 64800.00 lib
F 61 nebraska 72700.00 con
F 52 nebraska 63600.00 con
F 30 nebraska 33500.00 lib
F 29 michigan 31400.00 lib
M 47 oklahoma 59400.00 mod
F 39 nebraska 47800.00 mod
F 47 oklahoma 52000.00 mod
M 49 michigan 58600.00 mod
M 63 oklahoma 67400.00 lib
M 30 michigan 39200.00 con
M 61 oklahoma 69600.00 lib
M 47 oklahoma 58700.00 mod
F 30 oklahoma 34500.00 lib
M 51 oklahoma 58000.00 mod
M 24 michigan 38800.00 mod
M 49 michigan 64500.00 mod
F 66 oklahoma 74500.00 con
M 65 michigan 76900.00 con
M 46 nebraska 58000.00 con
M 45 oklahoma 51800.00 mod
M 47 michigan 63600.00 con
M 29 michigan 44800.00 con
M 57 oklahoma 69300.00 lib
M 20 michigan 28700.00 lib
M 35 michigan 43400.00 mod
M 61 oklahoma 67000.00 lib
M 31 oklahoma 37300.00 mod
F 18 michigan 20800.00 lib
F 26 oklahoma 29200.00 lib
M 28 michigan 36400.00 lib
M 59 oklahoma 69400.00 lib

Normalized and encoded data:

# people_data.txt
#
# sex (-1 = M, 1 = F)
# age / 100,
# state (MI = 100, NE = 010, OK = 001),
# income / 100_000,
# politics
# (conservative = 100, moderate = 010,
# liberal = 001)
#
1, 0.24, 1, 0, 0, 0.2950, 0, 0, 1
-1, 0.39, 0, 0, 1, 0.5120, 0, 1, 0
1, 0.63, 0, 1, 0, 0.7580, 1, 0, 0
-1, 0.36, 1, 0, 0, 0.4450, 0, 1, 0
1, 0.27, 0, 1, 0, 0.2860, 0, 0, 1
1, 0.50, 0, 1, 0, 0.5650, 0, 1, 0
1, 0.50, 0, 0, 1, 0.5500, 0, 1, 0
-1, 0.19, 0, 0, 1, 0.3270, 1, 0, 0
1, 0.22, 0, 1, 0, 0.2770, 0, 1, 0
-1, 0.39, 0, 0, 1, 0.4710, 0, 0, 1
1, 0.34, 1, 0, 0, 0.3940, 0, 1, 0
-1, 0.22, 1, 0, 0, 0.3350, 1, 0, 0
1, 0.35, 0, 0, 1, 0.3520, 0, 0, 1
-1, 0.33, 0, 1, 0, 0.4640, 0, 1, 0
1, 0.45, 0, 1, 0, 0.5410, 0, 1, 0
1, 0.42, 0, 1, 0, 0.5070, 0, 1, 0
-1, 0.33, 0, 1, 0, 0.4680, 0, 1, 0
1, 0.25, 0, 0, 1, 0.3000, 0, 1, 0
-1, 0.31, 0, 1, 0, 0.4640, 1, 0, 0
1, 0.27, 1, 0, 0, 0.3250, 0, 0, 1
1, 0.48, 1, 0, 0, 0.5400, 0, 1, 0
-1, 0.64, 0, 1, 0, 0.7130, 0, 0, 1
1, 0.61, 0, 1, 0, 0.7240, 1, 0, 0
1, 0.54, 0, 0, 1, 0.6100, 1, 0, 0
1, 0.29, 1, 0, 0, 0.3630, 1, 0, 0
1, 0.50, 0, 0, 1, 0.5500, 0, 1, 0
1, 0.55, 0, 0, 1, 0.6250, 1, 0, 0
1, 0.40, 1, 0, 0, 0.5240, 1, 0, 0
1, 0.22, 1, 0, 0, 0.2360, 0, 0, 1
1, 0.68, 0, 1, 0, 0.7840, 1, 0, 0
-1, 0.60, 1, 0, 0, 0.7170, 0, 0, 1
-1, 0.34, 0, 0, 1, 0.4650, 0, 1, 0
-1, 0.25, 0, 0, 1, 0.3710, 1, 0, 0
-1, 0.31, 0, 1, 0, 0.4890, 0, 1, 0
1, 0.43, 0, 0, 1, 0.4800, 0, 1, 0
1, 0.58, 0, 1, 0, 0.6540, 0, 0, 1
-1, 0.55, 0, 1, 0, 0.6070, 0, 0, 1
-1, 0.43, 0, 1, 0, 0.5110, 0, 1, 0
-1, 0.43, 0, 0, 1, 0.5320, 0, 1, 0
-1, 0.21, 1, 0, 0, 0.3720, 1, 0, 0
1, 0.55, 0, 0, 1, 0.6460, 1, 0, 0
1, 0.64, 0, 1, 0, 0.7480, 1, 0, 0
-1, 0.41, 1, 0, 0, 0.5880, 0, 1, 0
1, 0.64, 0, 0, 1, 0.7270, 1, 0, 0
-1, 0.56, 0, 0, 1, 0.6660, 0, 0, 1
1, 0.31, 0, 0, 1, 0.3600, 0, 1, 0
-1, 0.65, 0, 0, 1, 0.7010, 0, 0, 1
1, 0.55, 0, 0, 1, 0.6430, 1, 0, 0
-1, 0.25, 1, 0, 0, 0.4030, 1, 0, 0
1, 0.46, 0, 0, 1, 0.5100, 0, 1, 0
-1, 0.36, 1, 0, 0, 0.5350, 1, 0, 0
1, 0.52, 0, 1, 0, 0.5810, 0, 1, 0
1, 0.61, 0, 0, 1, 0.6790, 1, 0, 0
1, 0.57, 0, 0, 1, 0.6570, 1, 0, 0
-1, 0.46, 0, 1, 0, 0.5260, 0, 1, 0
-1, 0.62, 1, 0, 0, 0.6680, 0, 0, 1
1, 0.55, 0, 0, 1, 0.6270, 1, 0, 0
-1, 0.22, 0, 0, 1, 0.2770, 0, 1, 0
-1, 0.50, 1, 0, 0, 0.6290, 1, 0, 0
-1, 0.32, 0, 1, 0, 0.4180, 0, 1, 0
-1, 0.21, 0, 0, 1, 0.3560, 1, 0, 0
1, 0.44, 0, 1, 0, 0.5200, 0, 1, 0
1, 0.46, 0, 1, 0, 0.5170, 0, 1, 0
1, 0.62, 0, 1, 0, 0.6970, 1, 0, 0
1, 0.57, 0, 1, 0, 0.6640, 1, 0, 0
-1, 0.67, 0, 0, 1, 0.7580, 0, 0, 1
1, 0.29, 1, 0, 0, 0.3430, 0, 0, 1
1, 0.53, 1, 0, 0, 0.6010, 1, 0, 0
-1, 0.44, 1, 0, 0, 0.5480, 0, 1, 0
1, 0.46, 0, 1, 0, 0.5230, 0, 1, 0
-1, 0.20, 0, 1, 0, 0.3010, 0, 1, 0
-1, 0.38, 1, 0, 0, 0.5350, 0, 1, 0
1, 0.50, 0, 1, 0, 0.5860, 0, 1, 0
1, 0.33, 0, 1, 0, 0.4250, 0, 1, 0
-1, 0.33, 0, 1, 0, 0.3930, 0, 1, 0
1, 0.26, 0, 1, 0, 0.4040, 1, 0, 0
1, 0.58, 1, 0, 0, 0.7070, 1, 0, 0
1, 0.43, 0, 0, 1, 0.4800, 0, 1, 0
-1, 0.46, 1, 0, 0, 0.6440, 1, 0, 0
1, 0.60, 1, 0, 0, 0.7170, 1, 0, 0
-1, 0.42, 1, 0, 0, 0.4890, 0, 1, 0
-1, 0.56, 0, 0, 1, 0.5640, 0, 0, 1
-1, 0.62, 0, 1, 0, 0.6630, 0, 0, 1
-1, 0.50, 1, 0, 0, 0.6480, 0, 1, 0
1, 0.47, 0, 0, 1, 0.5200, 0, 1, 0
-1, 0.67, 0, 1, 0, 0.8040, 0, 0, 1
-1, 0.40, 0, 0, 1, 0.5040, 0, 1, 0
1, 0.42, 0, 1, 0, 0.4840, 0, 1, 0
1, 0.64, 1, 0, 0, 0.7200, 1, 0, 0
-1, 0.47, 1, 0, 0, 0.5870, 0, 0, 1
1, 0.45, 0, 1, 0, 0.5280, 0, 1, 0
-1, 0.25, 0, 0, 1, 0.4090, 1, 0, 0
1, 0.38, 1, 0, 0, 0.4840, 1, 0, 0
1, 0.55, 0, 0, 1, 0.6000, 0, 1, 0
-1, 0.44, 1, 0, 0, 0.6060, 0, 1, 0
1, 0.33, 1, 0, 0, 0.4100, 0, 1, 0
1, 0.34, 0, 0, 1, 0.3900, 0, 1, 0
1, 0.27, 0, 1, 0, 0.3370, 0, 0, 1
1, 0.32, 0, 1, 0, 0.4070, 0, 1, 0
1, 0.42, 0, 0, 1, 0.4700, 0, 1, 0
-1, 0.24, 0, 0, 1, 0.4030, 1, 0, 0
1, 0.42, 0, 1, 0, 0.5030, 0, 1, 0
1, 0.25, 0, 0, 1, 0.2800, 0, 0, 1
1, 0.51, 0, 1, 0, 0.5800, 0, 1, 0
-1, 0.55, 0, 1, 0, 0.6350, 0, 0, 1
1, 0.44, 1, 0, 0, 0.4780, 0, 0, 1
-1, 0.18, 1, 0, 0, 0.3980, 1, 0, 0
-1, 0.67, 0, 1, 0, 0.7160, 0, 0, 1
1, 0.45, 0, 0, 1, 0.5000, 0, 1, 0
1, 0.48, 1, 0, 0, 0.5580, 0, 1, 0
-1, 0.25, 0, 1, 0, 0.3900, 0, 1, 0
-1, 0.67, 1, 0, 0, 0.7830, 0, 1, 0
1, 0.37, 0, 0, 1, 0.4200, 0, 1, 0
-1, 0.32, 1, 0, 0, 0.4270, 0, 1, 0
1, 0.48, 1, 0, 0, 0.5700, 0, 1, 0
-1, 0.66, 0, 0, 1, 0.7500, 0, 0, 1
1, 0.61, 1, 0, 0, 0.7000, 1, 0, 0
-1, 0.58, 0, 0, 1, 0.6890, 0, 1, 0
1, 0.19, 1, 0, 0, 0.2400, 0, 0, 1
1, 0.38, 0, 0, 1, 0.4300, 0, 1, 0
-1, 0.27, 1, 0, 0, 0.3640, 0, 1, 0
1, 0.42, 1, 0, 0, 0.4800, 0, 1, 0
1, 0.60, 1, 0, 0, 0.7130, 1, 0, 0
-1, 0.27, 0, 0, 1, 0.3480, 1, 0, 0
1, 0.29, 0, 1, 0, 0.3710, 1, 0, 0
-1, 0.43, 1, 0, 0, 0.5670, 0, 1, 0
1, 0.48, 1, 0, 0, 0.5670, 0, 1, 0
1, 0.27, 0, 0, 1, 0.2940, 0, 0, 1
-1, 0.44, 1, 0, 0, 0.5520, 1, 0, 0
1, 0.23, 0, 1, 0, 0.2630, 0, 0, 1
-1, 0.36, 0, 1, 0, 0.5300, 0, 0, 1
1, 0.64, 0, 0, 1, 0.7250, 1, 0, 0
1, 0.29, 0, 0, 1, 0.3000, 0, 0, 1
-1, 0.33, 1, 0, 0, 0.4930, 0, 1, 0
-1, 0.66, 0, 1, 0, 0.7500, 0, 0, 1
-1, 0.21, 0, 0, 1, 0.3430, 1, 0, 0
1, 0.27, 1, 0, 0, 0.3270, 0, 0, 1
1, 0.29, 1, 0, 0, 0.3180, 0, 0, 1
-1, 0.31, 1, 0, 0, 0.4860, 0, 1, 0
1, 0.36, 0, 0, 1, 0.4100, 0, 1, 0
1, 0.49, 0, 1, 0, 0.5570, 0, 1, 0
-1, 0.28, 1, 0, 0, 0.3840, 1, 0, 0
-1, 0.43, 0, 0, 1, 0.5660, 0, 1, 0
-1, 0.46, 0, 1, 0, 0.5880, 0, 1, 0
1, 0.57, 1, 0, 0, 0.6980, 1, 0, 0
-1, 0.52, 0, 0, 1, 0.5940, 0, 1, 0
-1, 0.31, 0, 0, 1, 0.4350, 0, 1, 0
-1, 0.55, 1, 0, 0, 0.6200, 0, 0, 1
1, 0.50, 1, 0, 0, 0.5640, 0, 1, 0
1, 0.48, 0, 1, 0, 0.5590, 0, 1, 0
-1, 0.22, 0, 0, 1, 0.3450, 1, 0, 0
1, 0.59, 0, 0, 1, 0.6670, 1, 0, 0
1, 0.34, 1, 0, 0, 0.4280, 0, 0, 1
-1, 0.64, 1, 0, 0, 0.7720, 0, 0, 1
1, 0.29, 0, 0, 1, 0.3350, 0, 0, 1
-1, 0.34, 0, 1, 0, 0.4320, 0, 1, 0
-1, 0.61, 1, 0, 0, 0.7500, 0, 0, 1
1, 0.64, 0, 0, 1, 0.7110, 1, 0, 0
-1, 0.29, 1, 0, 0, 0.4130, 1, 0, 0
1, 0.63, 0, 1, 0, 0.7060, 1, 0, 0
-1, 0.29, 0, 1, 0, 0.4000, 1, 0, 0
-1, 0.51, 1, 0, 0, 0.6270, 0, 1, 0
-1, 0.24, 0, 0, 1, 0.3770, 1, 0, 0
1, 0.48, 0, 1, 0, 0.5750, 0, 1, 0
1, 0.18, 1, 0, 0, 0.2740, 1, 0, 0
1, 0.18, 1, 0, 0, 0.2030, 0, 0, 1
1, 0.33, 0, 1, 0, 0.3820, 0, 0, 1
-1, 0.20, 0, 0, 1, 0.3480, 1, 0, 0
1, 0.29, 0, 0, 1, 0.3300, 
0, 0, 1 -1, 0.44, 0, 0, 1, 0.6300, 1, 0, 0 -1, 0.65, 0, 0, 1, 0.8180, 1, 0, 0 -1, 0.56, 1, 0, 0, 0.6370, 0, 0, 1 -1, 0.52, 0, 0, 1, 0.5840, 0, 1, 0 -1, 0.29, 0, 1, 0, 0.4860, 1, 0, 0 -1, 0.47, 0, 1, 0, 0.5890, 0, 1, 0 1, 0.68, 1, 0, 0, 0.7260, 0, 0, 1 1, 0.31, 0, 0, 1, 0.3600, 0, 1, 0 1, 0.61, 0, 1, 0, 0.6250, 0, 0, 1 1, 0.19, 0, 1, 0, 0.2150, 0, 0, 1 1, 0.38, 0, 0, 1, 0.4300, 0, 1, 0 -1, 0.26, 1, 0, 0, 0.4230, 1, 0, 0 1, 0.61, 0, 1, 0, 0.6740, 1, 0, 0 1, 0.40, 1, 0, 0, 0.4650, 0, 1, 0 -1, 0.49, 1, 0, 0, 0.6520, 0, 1, 0 1, 0.56, 1, 0, 0, 0.6750, 1, 0, 0 -1, 0.48, 0, 1, 0, 0.6600, 0, 1, 0 1, 0.52, 1, 0, 0, 0.5630, 0, 0, 1 -1, 0.18, 1, 0, 0, 0.2980, 1, 0, 0 -1, 0.56, 0, 0, 1, 0.5930, 0, 0, 1 -1, 0.52, 0, 1, 0, 0.6440, 0, 1, 0 -1, 0.18, 0, 1, 0, 0.2860, 0, 1, 0 -1, 0.58, 1, 0, 0, 0.6620, 0, 0, 1 -1, 0.39, 0, 1, 0, 0.5510, 0, 1, 0 -1, 0.46, 1, 0, 0, 0.6290, 0, 1, 0 -1, 0.40, 0, 1, 0, 0.4620, 0, 1, 0 -1, 0.60, 1, 0, 0, 0.7270, 0, 0, 1 1, 0.36, 0, 1, 0, 0.4070, 0, 0, 1 1, 0.44, 1, 0, 0, 0.5230, 0, 1, 0 1, 0.28, 1, 0, 0, 0.3130, 0, 0, 1 1, 0.54, 0, 0, 1, 0.6260, 1, 0, 0

Validation data:

# people_validation.txt
#
-1, 0.51, 1, 0, 0, 0.6120, 0, 1, 0
-1, 0.32, 0, 1, 0, 0.4610, 0, 1, 0
 1, 0.55, 1, 0, 0, 0.6270, 1, 0, 0
 1, 0.25, 0, 0, 1, 0.2620, 0, 0, 1
 1, 0.33, 0, 0, 1, 0.3730, 0, 0, 1
-1, 0.29, 0, 1, 0, 0.4620, 1, 0, 0
 1, 0.65, 1, 0, 0, 0.7270, 1, 0, 0
-1, 0.43, 0, 1, 0, 0.5140, 0, 1, 0
-1, 0.54, 0, 1, 0, 0.6480, 0, 0, 1
 1, 0.61, 0, 1, 0, 0.7270, 1, 0, 0
 1, 0.52, 0, 1, 0, 0.6360, 1, 0, 0
 1, 0.30, 0, 1, 0, 0.3350, 0, 0, 1
 1, 0.29, 1, 0, 0, 0.3140, 0, 0, 1
-1, 0.47, 0, 0, 1, 0.5940, 0, 1, 0
 1, 0.39, 0, 1, 0, 0.4780, 0, 1, 0
 1, 0.47, 0, 0, 1, 0.5200, 0, 1, 0
-1, 0.49, 1, 0, 0, 0.5860, 0, 1, 0
-1, 0.63, 0, 0, 1, 0.6740, 0, 0, 1
-1, 0.30, 1, 0, 0, 0.3920, 1, 0, 0
-1, 0.61, 0, 0, 1, 0.6960, 0, 0, 1
-1, 0.47, 0, 0, 1, 0.5870, 0, 1, 0
 1, 0.30, 0, 0, 1, 0.3450, 0, 0, 1
-1, 0.51, 0, 0, 1, 0.5800, 0, 1, 0
-1, 0.24, 1, 0, 0, 0.3880, 0, 1, 0
-1, 0.49, 1, 0, 0, 0.6450, 0, 1, 0
 1, 0.66, 0, 0, 1, 0.7450, 1, 0, 0
-1, 0.65, 1, 0, 0, 0.7690, 1, 0, 0
-1, 0.46, 0, 1, 0, 0.5800, 1, 0, 0
-1, 0.45, 0, 0, 1, 0.5180, 0, 1, 0
-1, 0.47, 1, 0, 0, 0.6360, 1, 0, 0
-1, 0.29, 1, 0, 0, 0.4480, 1, 0, 0
-1, 0.57, 0, 0, 1, 0.6930, 0, 0, 1
-1, 0.20, 1, 0, 0, 0.2870, 0, 0, 1
-1, 0.35, 1, 0, 0, 0.4340, 0, 1, 0
-1, 0.61, 0, 0, 1, 0.6700, 0, 0, 1
-1, 0.31, 0, 0, 1, 0.3730, 0, 1, 0
 1, 0.18, 1, 0, 0, 0.2080, 0, 0, 1
 1, 0.26, 0, 0, 1, 0.2920, 0, 0, 1
-1, 0.28, 1, 0, 0, 0.3640, 0, 0, 1
-1, 0.59, 0, 0, 1, 0.6940, 0, 0, 1
